This blog post is part of a series based on the paper “Ten simple rules for large-scale data processing” by Arkarachai Fungtammasan et al. (PLOS Computational Biology, 2022). Each installment reviews one of the rules proposed by the authors and illustrates how it can be applied when working in Terra. In this installment, we tackle a topic that never fails to generate strong opinions: automating data processing with workflows.

“Automation in the form of robust pipelines described as code is a key foundation for success of large-scale data processing as it demonstrates it is technically possible to process the data without intervention.”

Arkarachai Fungtammasan & co waste no time getting to the heart of the matter on this one: if you’re going to be running any kind of multi-step data processing on more than a trivial amount of data, you’re going to have to automate the process. How? By creating a workflow script (often called a “pipeline”) that describes the sequence of operations so that a workflow execution system can run it end-to-end without human intervention.

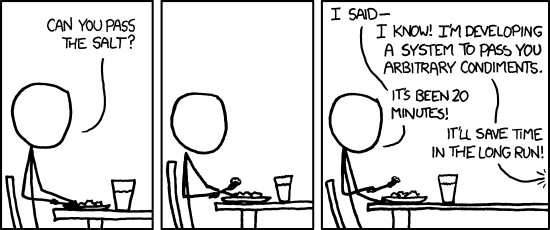

Does that mean everything you ever do has to be a workflow? No. But anytime you find yourself running sequential commands that are predictable/repetitive (with mostly the same settings every time), where some operations could be run in parallel, and individual steps typically take more than a few minutes to run — that should probably be a workflow.
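To make the idea of a pipeline described as code a bit more concrete, here is a minimal sketch in plain Python. The step functions (`align`, `call_variants`) and the sample names are hypothetical placeholders that are not taken from the paper or from Terra; real steps would invoke actual tools. The sketch only illustrates the shape of the problem: a fixed sequence of steps per sample, with independent samples processed in parallel and no manual intervention in between.

```python
from concurrent.futures import ThreadPoolExecutor


# Hypothetical steps: each one stands in for a real tool invocation.
def align(sample: str) -> str:
    return f"{sample}.aligned"


def call_variants(aligned: str) -> str:
    return f"{aligned}.variants"


def process_sample(sample: str) -> str:
    """Run the steps for one sample in a fixed, predictable order."""
    return call_variants(align(sample))


def run_pipeline(samples: list[str], max_workers: int = 4) -> dict[str, str]:
    """Process every sample end to end.

    Samples do not depend on each other, so they can run in parallel.
    """
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # pool.map preserves input order, so results line up with samples.
        results = list(pool.map(process_sample, samples))
    return dict(zip(samples, results))


if __name__ == "__main__":
    print(run_pipeline(["sample1", "sample2", "sample3"]))
```

A workflow language expresses this same structure (ordered steps, parallel branches), and the execution system then takes care of running it at scale.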

It does take some effort to create workflows in the first place. On the bright side, automating data processing work frees you up to do more interesting tasks that are a better use of your brainpower, reduces human error and can massively increase reproducibility.

XKCD Comic by Randall Munroe, https://xkcd.com

Also, remember Rule 1: Don’t reinvent the wheel. There are many groups and projects that share their workflows, either in their own GitHub repositories or in community repositories like Dockstore. The Terra Showcase provides a number of public workspaces containing workflows for common analyses like whole genome alignment and variant calling, single-cell data processing and more, preconfigured to run out of the box on example data.

Make sure to use a robust, scalable workflow system

The authors stress that for large-scale data processing, not just any form of automation will do:

“[…] it is important to use or implement workflow systems that record key elements of data and processing so that one can programmatically rerun processing where required.”

In addition to the basic capability of executing a series of commands in a certain order, you also need some additional functionality, such as systematic logging for provenance and reproducibility (tying back to Rule 2: Document everything), so you’re going to want to use a system that’s designed for that specific purpose.
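As a rough illustration of what “recording key elements of data and processing” can look like, the sketch below wraps a single command and appends a provenance record (the command, input checksums, timestamps and exit code) to a log file. The function names `run_and_record` and `rerun`, the record fields, and the JSON-lines log format are all assumptions made for this example; this is not how Cromwell or any other workflow engine stores its metadata.

```python
import hashlib
import json
import subprocess
import sys
from datetime import datetime, timezone
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Checksum an input file so the exact data used can be verified later."""
    return hashlib.sha256(path.read_bytes()).hexdigest()


def run_and_record(command: list[str], inputs: list[Path], log_path: Path) -> dict:
    """Run one command and append a provenance record to a JSON-lines log."""
    record = {
        "command": command,
        "inputs": {str(p): sha256_of(p) for p in inputs},
        "started": datetime.now(timezone.utc).isoformat(),
    }
    result = subprocess.run(command, capture_output=True, text=True)
    record["finished"] = datetime.now(timezone.utc).isoformat()
    record["exit_code"] = result.returncode
    with log_path.open("a") as handle:
        handle.write(json.dumps(record) + "\n")
    return record


def rerun(record: dict) -> int:
    """Programmatically repeat a step using only what was recorded."""
    return subprocess.run(record["command"]).returncode


if __name__ == "__main__":
    # Hypothetical usage: a trivial command with no input files.
    rec = run_and_record([sys.executable, "-c", "print('done')"], [], Path("provenance.jsonl"))
    print(rec["exit_code"])
```

Even this small wrapper leaves out a great deal (tool versions, container images, outputs, resource usage), which gives a sense of how much bookkeeping a purpose-built system does on your behalf.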

Some will object, arguing that it’s possible to write the necessary functionality into a “plain” Bash or Python script. But that amounts to a lot of extra work that you could avoid by using a dedicated workflow system that has those features baked in, and the scalability of a home-grown script will likely remain limited.

The paper lists the four current favorites in this space:

“There are workflow tools that can support many use cases including workflow languages and systems such as Workflow Description Language (WDL) [12], Common Workflow Language (CWL) [13], Snakemake [14], or Nextflow [15].”

The authors recommend choosing a system that is “compatible with chosen computing platforms for both technical and compliance reasons.” Certainly, if you’re tied to a specific platform, then your choice is constrained by what is available there. However, that may still leave you with multiple options to choose from. For example, Terra currently supports WDL (via a built-in Cromwell engine) as well as Galaxy workflows, and there is work underway to expand that range to additional workflow languages in order to provide researchers with more options to suit their needs and preferences.

A track record of success

Scalable workflow execution has been a core capability for Terra from its inception, and is probably its most mature and widely used feature. It’s been very gratifying to see a wide range of projects use it with great success, from single-lab studies to massive undertakings like the All of Us Research Program, which recently released nearly 100,000 whole genomes that were processed on Terra.

One of my favorite such success stories is the Telomere-to-Telomere (T2T) variant calling study by Samantha Zarate and collaborators in the Schatz Lab at Johns Hopkins University. They used Terra through the NHGRI AnVIL to measure the benefits of using the T2T version of the human reference genome to call variants on data from the 1000 Genomes Project, which involved realigning and reanalyzing 3,202 whole genomes. As Samantha described previously on this blog, they estimated it would take months, possibly up to a year, to run all the computations on JHU’s institutional cluster. Instead, it ended up taking them about a week to run everything on Terra once the workflows were in place:

“The push-button capabilities of Terra let us scale up easily and rapidly: after verifying the success of our WDLs on a few samples, we could move on to processing hundreds or thousands of workflows at a time. It took us about a week to process everything, and that was with Google’s default compute quotas in place (eg max 25,000 cores at a time), which can be raised on request.” — Samantha Zarate, Terra blog

To me this really underscores the importance of choosing a system that allows you to scale up your output without increasing your workload.

There are some other really important features of scalable workflow systems that I’m itching to get into, such as monitoring, resource management, performance optimization and so on, but they are covered by their own “simple rules” later in the paper — so stay tuned for the next installments in this series!

In the meantime, feel free to check out our Workflows Quickstart if you’d like a fast, hands-on introduction to running workflows in Terra.