This blog post is part of a series based on the paper “Ten simple rules for large-scale data processing” by Arkarachai Fungtammasan et al. (PLOS Computational Biology, 2022). Each installment reviews one of the rules proposed by the authors and illustrates how it can be applied when working in Terra. In this installment, we dig into the importance of testing tools and pipelines before applying them to novel data, and we highlight how Terra’s workflow system enables efficient end-to-end testing in practice and at scale.

Finally, we get a chance to pick up this series again! We had left things off with Rule #4: Automate your workflows, which is one of my favorite topics to write about because so many people are still missing out on the benefits of automation. This next rule is in some ways an extension (or perhaps a specific application) of the concepts we discussed previously.

With this fifth rule, Arkarachai Fungtammasan and colleagues make yet another unimpeachable point:

“A key element of a large-scale data processing effort is a robust set of test examples that can be easily run.”

My only quibble is that I’d love to see the purpose stated a little more explicitly. There are multiple things you can achieve by running tests on your analysis tools, from ferreting out basic programming errors (bugs) to handling weird edge cases and malformed data. Based on the specific points they raise, I assume that the main purpose the authors have in mind here is to ensure that running the end-to-end analysis produces correct results across a range of input conditions (shallow vs. deep sequencing, sorted vs. unsorted reads, and so on).

“If the goal is to process sequencing data, including studies with particularly deep sequencing and particularly shallow sequencing can be wise. In sequencing data, it is possible that reads may be sorted or in arbitrary order: make sure that variable patterns that can be expected in valid input are covered by tests. If files can be derived from multiple sources, using different technologies, or are in multiple formats, include examples of each possible case.”

In short, this amounts to saying “before you run anything on new data, you should run it on known data of the same type”. A very sensible recommendation, and one that could save you a lot of time and money that you might otherwise spend learning a painful lesson about obscure, data-type-specific parameters.
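To make “run it on known data” a little more concrete, here is a minimal sketch of the comparison step. It assumes your pipeline reports a few summary metrics per sample and that you kept the values from a previous, trusted run on the same known test data. The metric names, values, and the 1% tolerance are all hypothetical placeholders.

```python
import math

# Hypothetical expected metrics for one known test sample, recorded from a
# previous, trusted run of the pipeline on the same input.
EXPECTED = {"mean_coverage": 30.2, "snp_count": 4_012_345, "ti_tv_ratio": 2.05}


def compare_metrics(observed, expected, rel_tol=0.01):
    """Return a list of discrepancies; an empty list means the test passed.

    A metric fails if it is missing from the observed output, or if it
    differs from the expected value by more than rel_tol (relative tolerance).
    """
    problems = []
    for name, want in expected.items():
        if name not in observed:
            problems.append(f"{name}: missing from output")
        elif not math.isclose(observed[name], want, rel_tol=rel_tol):
            problems.append(f"{name}: expected {want}, got {observed[name]}")
    return problems


if __name__ == "__main__":
    # Stand-in for metrics parsed from a new pipeline run.
    observed = {"mean_coverage": 30.1, "snp_count": 4_012_345, "ti_tv_ratio": 1.80}
    for line in compare_metrics(observed, EXPECTED):
        print(line)
```

A relative tolerance is used rather than exact equality because some steps (for example, anything involving random downsampling) may not be bit-for-bit reproducible; how loose that tolerance should be is a judgment call for each metric.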

In the last paragraph, the authors also tease the question of handling errors that arise when running in production, which they cover in more detail later in “Rule 9: Learn to recover from failures”. Briefly, the idea is that when something inevitably fails because of an edge case, whether by producing invalid output, producing no output at all, or “hanging” indefinitely (the analysis no longer progresses but the software does not exit), you want some predetermined logic to handle the problem efficiently, either in the code or in your post-analysis process:

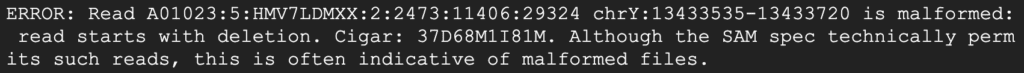

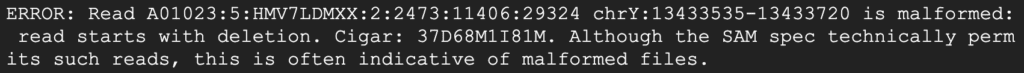

“It is also wise to test with invalid inputs and cases that are expected to fail such as inputs that are too large, too small, or malformed. Determining what errors are observed and using these error states to annotate inputs with unknown validity can help to triage data that fail to process.”

So in that case it’s not so much about testing tools or pipelines to address correctness issues before deploying them into production; it’s about identifying what could fail due to issues in the data itself and determining how to handle those failures.
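As a rough illustration of “using these error states to annotate inputs”, the sketch below runs one command per input and sorts the outcome into one of the failure modes described above: a non-zero exit, no output, invalid output, or a hang (caught with a timeout). The optional validate function and the stand-in commands are hypothetical; in practice you would call your real tool and plug in whatever output check makes sense for your data.

```python
import subprocess
import sys


def run_and_triage(cmd, timeout_s, validate=None):
    """Run one command and classify its outcome.

    Returns one of:
      "hung"           - did not finish within timeout_s seconds
      "failed"         - exited with a non-zero return code
      "no_output"      - exited cleanly but wrote nothing to stdout
      "invalid_output" - output rejected by the optional validate(stdout) check
      "ok"             - none of the above
    """
    try:
        # subprocess.run kills the child process if the timeout expires.
        result = subprocess.run(cmd, capture_output=True, text=True, timeout=timeout_s)
    except subprocess.TimeoutExpired:
        return "hung"
    if result.returncode != 0:
        return "failed"
    if not result.stdout.strip():
        return "no_output"
    if validate is not None and not validate(result.stdout):
        return "invalid_output"
    return "ok"


if __name__ == "__main__":
    # Stand-in commands that use the Python interpreter to mimic each behavior;
    # replace them with real invocations of your tool on each test input.
    cases = {
        "good_input": [sys.executable, "-c", "print('ok')"],
        "empty_input": [sys.executable, "-c", "pass"],
        "malformed_input": [sys.executable, "-c", "import sys; sys.exit(2)"],
        "pathological_input": [sys.executable, "-c", "import time; time.sleep(5)"],
    }
    annotations = {input_id: run_and_triage(cmd, timeout_s=1) for input_id, cmd in cases.items()}
    for input_id, state in annotations.items():
        print(input_id, state)
```

The resulting annotations are just a mapping from input ID to error state, which is easy to write back to a spreadsheet or data table so that inputs of unknown validity can be triaged by category.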

Of course, you may not be able to plan for every possible eventuality (every so often, someone invents a new sequencing technology that comes with exciting new error modes), so over time you will probably have to add new test cases to your test suite:

“Be prepared to extend the test set as new edge cases present themselves.”

That adds an important requirement to how you’re going to do the testing: it has to be relatively easy to add a new test case to your test suite without disrupting ongoing production work.
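One way to keep new test cases cheap to add is to describe the suite as data rather than code: a manifest with one row per test case, so adding a case means adding a row. The sketch below assumes a hypothetical TSV manifest whose columns mirror the conditions the authors mention (depth, sort order, file format) plus an expected outcome, and flags any combination of valid-input conditions that no passing test case covers.

```python
import csv
import io
import itertools

# Hypothetical manifest (normally a file under version control).
# Adding a new test case = appending a row.
MANIFEST_TSV = """test_id\tdepth\tsort_order\tformat\texpect
deep_sorted_bam\tdeep\tsorted\tBAM\tpass
shallow_sorted_bam\tshallow\tsorted\tBAM\tpass
deep_unsorted_cram\tdeep\tunsorted\tCRAM\tpass
truncated_file\tdeep\tsorted\tBAM\tfail
"""

# Conditions along which valid inputs may vary (illustrative values only).
REQUIRED = {
    "depth": ["deep", "shallow"],
    "sort_order": ["sorted", "unsorted"],
    "format": ["BAM", "CRAM"],
}


def load_manifest(text):
    """Parse the TSV manifest, rejecting duplicate test IDs."""
    rows = list(csv.DictReader(io.StringIO(text), delimiter="\t"))
    ids = [row["test_id"] for row in rows]
    dupes = {i for i in ids if ids.count(i) > 1}
    if dupes:
        raise ValueError(f"duplicate test_id(s): {sorted(dupes)}")
    return rows


def missing_combinations(rows, required):
    """Return combinations of required conditions that no test case
    expected to pass currently covers."""
    keys = list(required)
    covered = {tuple(row[k] for k in keys) for row in rows if row["expect"] == "pass"}
    return [combo for combo in itertools.product(*required.values()) if combo not in covered]


if __name__ == "__main__":
    for combo in missing_combinations(load_manifest(MANIFEST_TSV), REQUIRED):
        print("not covered:", dict(zip(REQUIRED, combo)))
```

Requiring the full cross product of conditions grows quickly as you add dimensions; a looser rule (for example, each value appears in at least one passing case) may be more practical for real suites. Expected-failure rows such as the truncated file are kept in the same manifest but excluded from the coverage check.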

Which brings us to the “how do you do this stuff in practice” part of the discussion.

Doing it in practice and at scale with Terra

Folks with a background in software engineering will probably say, “Oh, we have standardized tools and approaches for doing this sort of thing.” For example, there are “continuous integration” tools that can be configured to pull code from GitHub and run preconfigured test suites. Larger, software development-focused teams (like the GATK team) rely on these extensively for testing their code. However, such tools can be difficult to set up if you don’t have a software engineering background, which is the case for many bioinformaticians whose job is not to write new tools but to apply existing tools appropriately to scientific analysis use cases.

Another problem is that those test suite tools are often designed for small-scale testing of individual steps rather than full-scale testing of end-to-end analyses. It can be difficult to apply them to use cases like running a whole-genome analysis pipeline on a battery of different input sets. That kind of testing is often done manually, which limits the number and complexity of test conditions you can realistically include in your test suite.

In contrast, Terra lends itself very well to this kind of end-to-end testing. You can set up as many different input configurations as you like, for individual workflows or even workflows of workflows (yay imports). Since the workflow system handles all aspects of execution on the cloud, including massive parallelization and linking to outputs in data tables, maintaining and executing a comprehensive test suite becomes accessible to just about everyone regardless of background.
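Since each input configuration can live as a row in a Terra data table, one lightweight way to set up a battery of test cases is to generate a load file for a dedicated table. The sketch below assumes Terra’s convention that the first column header of a data-table load TSV is `entity:<table>_id`; the table name `test_case`, the attribute names, and the bucket paths are hypothetical.

```python
import csv
import io


def to_terra_load_tsv(table, rows, columns):
    """Render test cases as a Terra data-table load file (TSV text).

    Assumes the first header must be "entity:<table>_id". Each remaining
    column becomes a table attribute that a workflow configuration can
    reference as an input, e.g. this.input_bam.
    """
    out = io.StringIO()
    writer = csv.writer(out, delimiter="\t", lineterminator="\n")
    writer.writerow([f"entity:{table}_id", *columns])
    for row_id, attrs in rows.items():
        writer.writerow([row_id, *(attrs[c] for c in columns)])
    return out.getvalue()


if __name__ == "__main__":
    # Hypothetical bucket paths and attributes, for illustration only.
    cases = {
        "deep_sorted_bam": {"input_bam": "gs://my-test-bucket/deep_sorted.bam", "expected_outcome": "pass"},
        "truncated_bam": {"input_bam": "gs://my-test-bucket/truncated.bam", "expected_outcome": "fail"},
    }
    print(to_terra_load_tsv("test_case", cases, ["input_bam", "expected_outcome"]), end="")
```

Uploading the resulting file to a workspace gives you one row per test case, and a single workflow configuration pointed at that table can then be run on any subset of rows.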

“But Geraldine,” I hear some of you say, “I don’t want to have to set up dozens of individual runs of pipeline tests through a graphical interface. It’s too much clicking!”

The good news is that you don’t have to go through the web interface to either set up or run your test suite. You can write scripts in either Python or R to manage test data, set up workflow configurations and launch workflows programmatically using a library called FISS, as described in the Terra documentation.
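For a flavor of what that looks like, here is a minimal sketch that launches one workflow submission per test case with FISS. It assumes a `test_case` data table already exists in the workspace and that the method configuration reads its inputs from that table. The positional argument order for `create_submission` (workspace namespace, workspace name, configuration namespace, configuration name, entity name, entity type) reflects our understanding of the FISS API; check it against the version you install. The `api` parameter exists only so the function can be exercised without a live workspace.

```python
def launch_test_suite(workspace_ns, workspace, config_ns, config_name,
                      test_case_ids, api=None):
    """Launch one Terra workflow submission per test case via FISS.

    Assumes a 'test_case' data table exists in the workspace and that the
    method configuration takes its inputs from it (this.* expressions).
    Returns a dict mapping each test case ID to its submission ID.
    """
    if api is None:
        # FISS is distributed on PyPI as "firecloud".
        from firecloud import api
    submissions = {}
    for case_id in test_case_ids:
        resp = api.create_submission(workspace_ns, workspace, config_ns,
                                     config_name, case_id, "test_case")
        # FISS calls return HTTP response objects; fail loudly on errors.
        resp.raise_for_status()
        submissions[case_id] = resp.json()["submissionId"]
    return submissions
```

Keeping the returned submission IDs lets a follow-up script poll for completion and then compare each test case’s outputs against its expected results.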

We know of multiple groups who use this capability to run end-to-end tests of their software tools and pipelines at scale; some even use GitHub Actions to trigger test runs automatically whenever they push commits or publish a new release on GitHub.
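A common pattern in that kind of setup is to run a quick smoke subset on every push and the full battery only for releases. The sketch below picks the tier from `GITHUB_EVENT_NAME`, the environment variable GitHub Actions sets to the name of the triggering event; the tier contents and test case names are hypothetical, and the selected IDs would then be handed to whatever script launches your Terra submissions.

```python
import os

# Hypothetical test tiers: a fast subset for every push, everything on release.
SMOKE_TESTS = ["shallow_sorted_bam"]
FULL_TESTS = SMOKE_TESTS + ["deep_sorted_bam", "deep_unsorted_cram", "truncated_file"]


def select_tests(env=None):
    """Choose which test cases to run based on the GitHub Actions event.

    GitHub Actions sets GITHUB_EVENT_NAME (e.g. "push", "pull_request",
    "release"). Outside CI the variable is unset, so we fall back to the
    smoke tests.
    """
    env = os.environ if env is None else env
    event = env.get("GITHUB_EVENT_NAME", "")
    return list(FULL_TESTS) if event == "release" else list(SMOKE_TESTS)


if __name__ == "__main__":
    print("\n".join(select_tests()))
```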

If you’re interested in trying this out, check out the documentation and the FISS Tutorial workspace, which includes several notebooks detailing how to use FISS in practice.

Note: At the time of writing, the tutorial for setting up and launching workflows using FISS is still in development. However, the workspace and data management tutorials are already complete and are a great way to get started with FISS and the Terra API.